Google DeepMind launches SIMA 2, the AI agent capable of reasoning and planning in video games

More than a year after introducing the original SIMA, an agent capable of following over 600 simple instructions across various virtual worlds, Google DeepMind has returned with a significantly more ambitious version.

SIMA 2 marks a decisive shift: the agent no longer just obeys commands like “turn left” or “open the map”; it can reason, plan, understand a larger goal, and even make progress on its own.

From simple executor to a reasoner capable of explaining its actions

DeepMind has integrated Gemini into SIMA 2, and the change is substantial. The agent no longer just executes commands; it interprets intentions, explains its choices, and can describe step by step how it plans to achieve a goal. Training relies on both human-annotated videos, as in the first generation, and labels generated automatically by Gemini.

SIMA 2 handles long chains of actions better, accepts drawings or sketches as instructions, responds in multiple languages, and can even react to emoji cues. The result is a far more flexible agent whose interactions feel almost human.

It adapts to games it has never seen before

While SIMA 1 was heavily reliant on the environments in which it was trained, SIMA 2 demonstrates true generalization capabilities.

The agent managed to follow complex instructions in ASKA, a Viking survival game, and in MineDojo, a research environment built on Minecraft, even though it had never been specifically trained on these games.

Moreover, SIMA 2 transfers its knowledge from one world to another: what it learns about resource extraction in one game can help it “harvest” in another. This marks a significant step towards truly transferable skills.

With Genie 3, SIMA 2 explores newly generated worlds

DeepMind goes a step further by pairing SIMA 2 with Genie 3, its real-time 3D world generator. The agent can thus enter an environment created from a single image or text prompt and navigate it as if it were an actual game.

This ability to adapt to entirely new worlds is precisely what researchers in “embodied AI” seek.

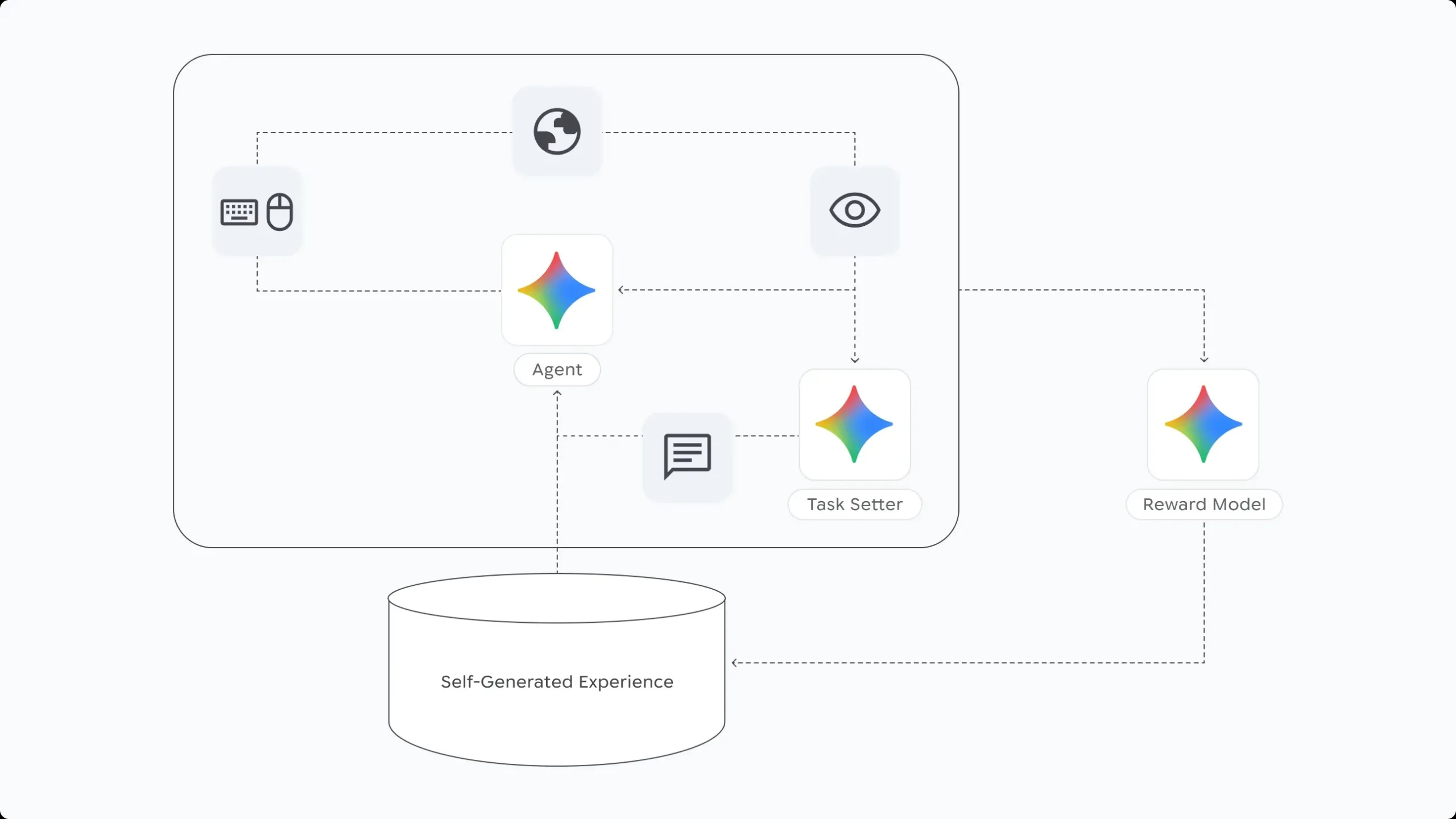

An AI that learns on its own while playing

One of the most interesting new features is SIMA 2’s ability to improve without human assistance. After an initial supervised phase, the agent generates its own training data by exploring environments, attempting actions, failing, and then retrying.

DeepMind describes this as a virtuous cycle: each version of SIMA builds on the experiences gathered by the previous one. This allows for a gradual increase in task difficulty and a widening of scenarios without needing more human annotators.
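The cycle described above can be illustrated with a toy loop. This is only a minimal sketch under stated assumptions, not DeepMind's training code: the `ToyAgent` class, its per-task success probabilities, the fixed 0.2 skill increment, and the task names are all hypothetical stand-ins chosen to show the shape of "attempt, fail, retry, then train the next version on the collected experience".

```python
import random
from dataclasses import dataclass, field


@dataclass
class Episode:
    task: str
    success: bool


@dataclass
class ToyAgent:
    # Hypothetical stand-in for a policy: per-task probability of success.
    skill: dict = field(default_factory=dict)

    def attempt(self, task, rng):
        # Unknown tasks start with a low (assumed) 10% success chance.
        p = self.skill.get(task, 0.1)
        return Episode(task=task, success=rng.random() < p)

    def train_on(self, episodes):
        # Return the next agent version; each successful episode
        # raises the skill for that task by an assumed fixed step.
        new_skill = dict(self.skill)
        for ep in episodes:
            if ep.success:
                new_skill[ep.task] = min(1.0, new_skill.get(ep.task, 0.1) + 0.2)
        return ToyAgent(skill=new_skill)


def self_improve(agent, tasks, generations, attempts_per_task, seed=0):
    rng = random.Random(seed)
    buffer = []  # all experience gathered so far, across versions
    for _ in range(generations):
        # The current version explores and generates its own data.
        new_episodes = [
            agent.attempt(task, rng)
            for task in tasks
            for _ in range(attempts_per_task)
        ]
        buffer.extend(new_episodes)
        # The next version builds on what the previous one collected.
        agent = agent.train_on(new_episodes)
    return agent, buffer


if __name__ == "__main__":
    final_agent, experience = self_improve(
        ToyAgent(), ["chop wood", "mine ore"], generations=5, attempts_per_task=10
    )
    print(len(experience), final_agent.skill)
```

No human labels enter the loop after the starting agent is created, which is the point of the illustration; a real system would replace the success check with a learned or model-based evaluation of each attempt.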

The dawn of embodied general AI

What SIMA 2 learns in games (moving, planning, using a tool, coordinating actions) is far from incidental for Google.

These are precisely the fundamental building blocks of an embodied intelligence capable, in the long run, of interacting with the real world. DeepMind does not hide this fact: SIMA 2 serves as a living laboratory for future autonomous robots, intelligent assistants, and systems capable of understanding space, movement, and objects.

However, not everything has been resolved yet: the agent still struggles with very long tasks, actions that require fine precision, complex visual understanding, and long-term memory.

A very limited deployment under supervision

SIMA 2 is currently reserved for a select group of researchers and game studios. DeepMind emphasizes that all self-improvement capabilities are still supervised and closely monitored. The teams are actively seeking interdisciplinary feedback to guide this type of AI responsibly.

SIMA 2 is not yet a general agent, but it clearly represents one of the most concrete advances towards systems capable not only of obeying but also of understanding, exploring… and self-improving.