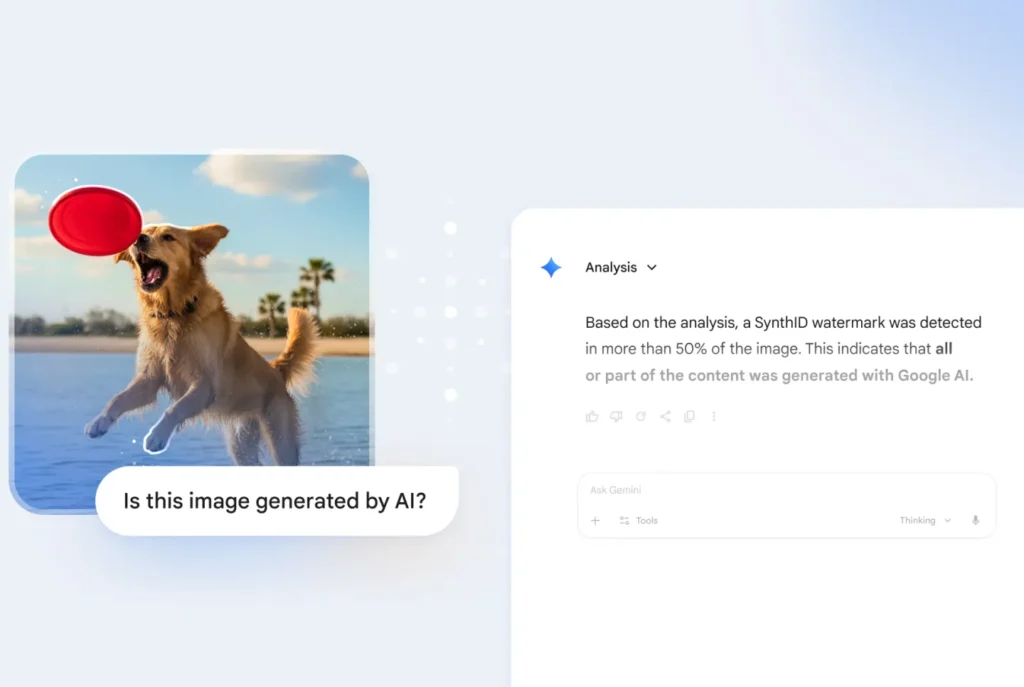

Google allows you to ask Gemini if an image was created by AI

Google is taking a significant step toward transparency in AI-generated content. As of today, you can ask Gemini directly whether an image was created or modified by a Google AI tool.

This seemingly simple feature could become essential as the line between real and synthetic continues to blur.

“Is This AI-Generated?”: Image Verification in Gemini

For now, this verification relies solely on SynthID, the invisible watermark developed by Google DeepMind. Users can upload an image in the Gemini app and ask: “Is this AI-generated?”

Gemini will then confirm whether the image was generated by a Google model or edited using one of Google’s tools (e.g., Nano Banana, Nano Banana Pro, Canvas).

This initial version applies only to images, but Google promises that video and audio support will follow “soon”, a critical addition in the age of high-fidelity deepfakes.

The company also plans to integrate this feature directly into Search, enabling anyone to verify the origin of online content.

Towards Enhanced Traceability with the C2PA Standard

As noted above, detection currently depends entirely on Google’s in-house SynthID technology. Google has announced the next step, however: full integration of the C2PA standard, which a growing number of industry players are adopting.

This will enable Gemini to identify content generated by:

- OpenAI (e.g., Sora),

- Adobe Firefly,

- TikTok and its AI tools,

- and many other C2PA-compatible creative solutions.

In other words, this is a step toward real interoperability in the fight against fake content, and one far more powerful than a single proprietary watermark.
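To make the two-signal idea concrete, here is a minimal sketch of how a verifier might combine a watermark check with declared C2PA metadata. It is illustrative only and does not describe how Gemini works internally: `ProvenanceSignals`, `provenance_verdict`, and both field names are hypothetical, and the sketch assumes the actual SynthID and C2PA checks have already been run elsewhere.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProvenanceSignals:
    """Hypothetical container for results produced by separate detectors."""
    synthid_detected: Optional[bool]  # None means the check was not run or was inconclusive
    c2pa_generator: Optional[str]     # tool name declared in C2PA metadata, if present


def provenance_verdict(signals: ProvenanceSignals) -> str:
    """Combine the two signals into a user-facing answer (illustrative logic only)."""
    if signals.synthid_detected:
        return "Created or edited with a Google AI tool (SynthID watermark found)"
    if signals.c2pa_generator:
        return f"C2PA metadata declares this generator: {signals.c2pa_generator}"
    # A missing signal does not prove the image is authentic.
    return "No provenance signal found; origin unknown"


print(provenance_verdict(ProvenanceSignals(synthid_detected=None, c2pa_generator="Adobe Firefly")))
```

The fallback branch matters most: an image with neither a watermark nor metadata is reported as of unknown origin, not as human-made.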

It’s worth noting that Nano Banana Pro, Google’s new image model presented today, will embed C2PA metadata in every image it generates. The move parallels TikTok, which announced this week that it is integrating C2PA into its own invisible watermark.

A Useful Advance… But Insufficient Until Platforms Follow Suit

This manual verification is an important advance: it gives users an immediate way to check an image. However, it also reveals a structural weakness: responsibility still falls on the end user, while most social platforms do not automatically detect AI-generated content.

As long as social networks do not integrate these detection systems themselves, verification will remain a voluntary act, and thus limited in effectiveness.

The widespread adoption of C2PA metadata is a necessary step, but it is the systemic adoption by platforms that will determine the success of this initiative.

A Key Step in the Battle Against Visual Misinformation

With Gemini able to recognize its own creations and soon those of other AI tools, Google is laying the groundwork for a trustworthy ecosystem surrounding generative images.

In a world where deepfakes are becoming indistinguishable from reality, this transparency, even if partial, is no longer optional but essential.

The real question now is: will the industry move fast enough to make these tools effective at scale?