Cloudflare: A Major Outage Related to Bot Management Brings Down Part of the Web

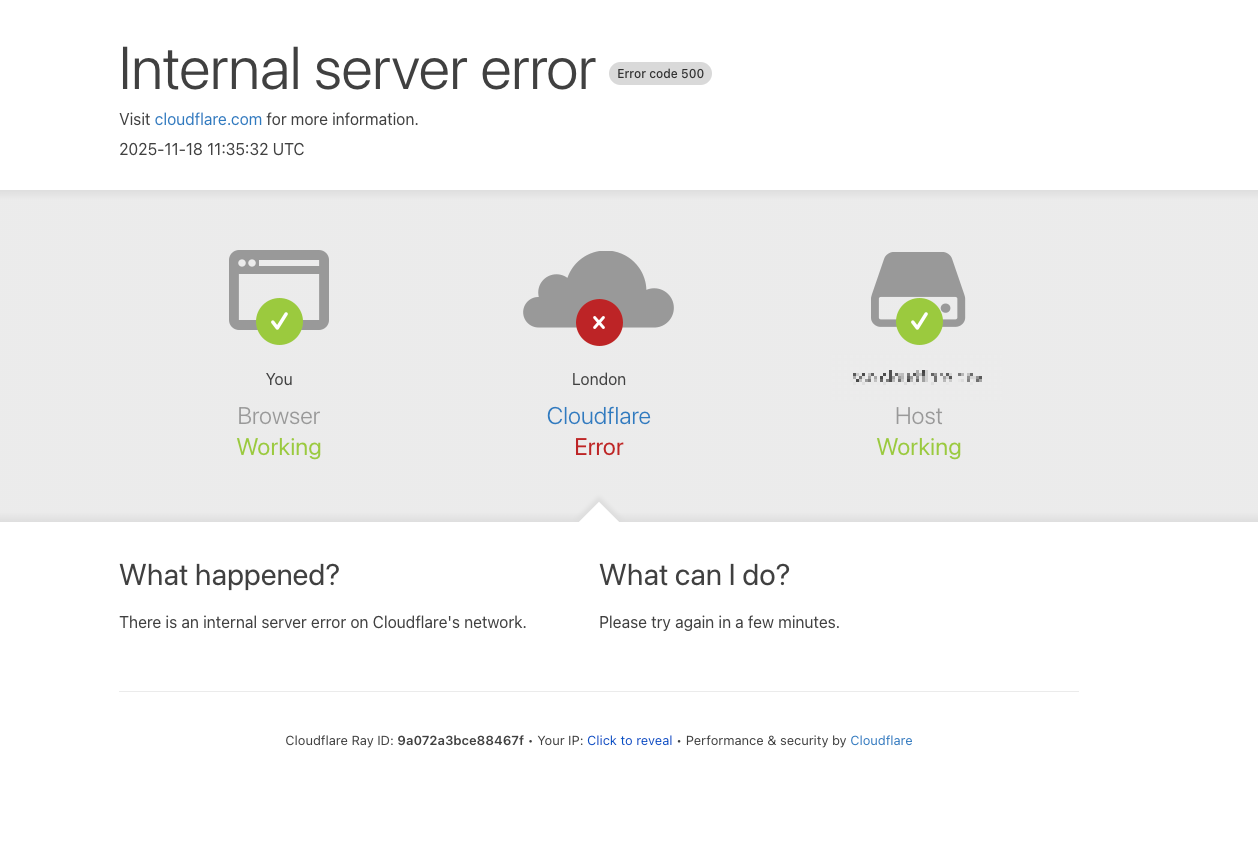

The web shook for several hours. On Tuesday, Cloudflare experienced its most significant outage since 2019, paralyzing a large part of the Internet and disrupting services ranging from X and ChatGPT to Downdetector.

The cause? A malfunction at the core of the Bot Management system, which is designed to identify and manage automated crawlers.

A Systemic Outage in One of the Web’s Infrastructure Pillars

For years, Cloudflare has claimed that around 20% of Internet traffic passes through its network. Its mission: to absorb traffic surges, block massive DDoS attacks, and ensure site availability amidst sudden traffic fluctuations.

But on Tuesday, it was the structure itself that faltered.

The crash rendered many major services inaccessible, recalling previous global outages related to Microsoft Azure or Amazon Web Services. Once again, this highlights the fragility of a centralized Internet where key players become critical points of failure.

The Issue Was Neither with Generative AI, DNS, Nor an Attack

Matthew Prince, co-founder and CEO of Cloudflare, published a detailed article tracing the problem’s origin. Contrary to initial hypotheses — including a potential DDoS attack — the incident stemmed from an internal malfunction related to a database permission system.

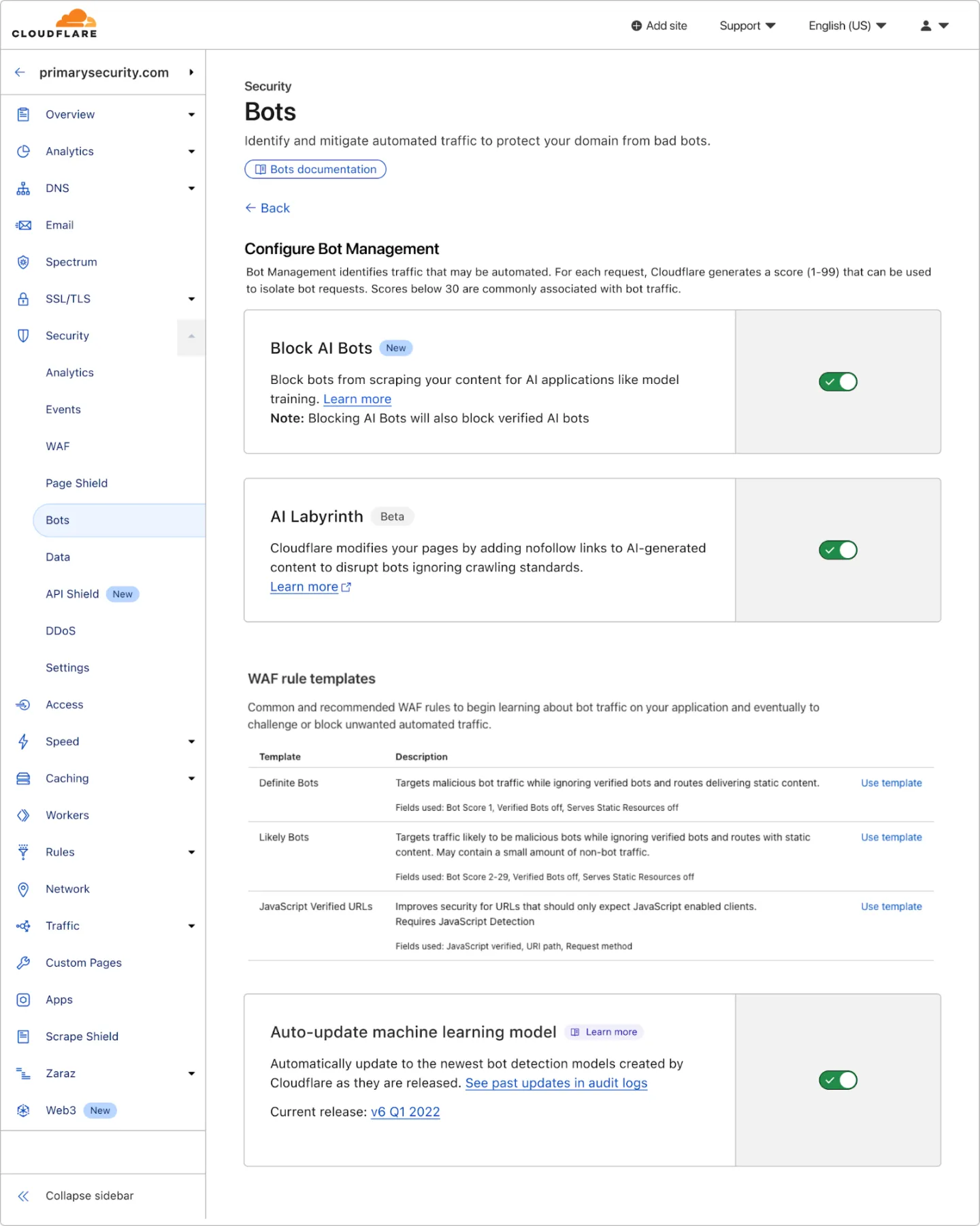

More specifically, it involved the component responsible for generating bot scores, which are essential for distinguishing human requests from those of bots, whether legitimate or malicious.

A Change in ClickHouse Caused the Malfunction

The machine learning model powering Bot Management relies on a frequently updated configuration file. However, a change in the behavior of the ClickHouse queries that generate this file caused duplicated “feature rows” to multiply.

The result: the configuration file ballooned in size and exceeded its preset memory limits, which ultimately crashed the central proxy that handles traffic dependent on the bot module.
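The failure mode described above can be illustrated with a small sketch. This is not Cloudflare's code: the limit `MAX_FEATURES`, the feature names, the row counts, and the function `load_features` are all hypothetical, chosen only to show how duplicated rows can push a file past a hard limit that the same content without duplicates would respect.

```python
# Illustrative sketch only; names and numbers are assumptions, not Cloudflare's.
MAX_FEATURES = 200  # hypothetical hard limit sized for the expected file


class FeatureLimitExceeded(Exception):
    """Raised when a feature file holds more rows than the loader allows."""


def load_features(rows, limit=MAX_FEATURES):
    """Load feature rows, refusing any file larger than the fixed limit."""
    if len(rows) > limit:
        raise FeatureLimitExceeded(
            f"{len(rows)} rows exceeds the limit of {limit}"
        )
    return list(rows)


# A normal file: 120 distinct features, comfortably under the limit.
unique_rows = [f"feature_{i}" for i in range(120)]

# The same features emitted twice by the upstream query.
duplicated_rows = unique_rows * 2

load_features(unique_rows)  # loads fine

try:
    load_features(duplicated_rows)
except FeatureLimitExceeded as exc:
    # If nothing handles this error, the process consuming the file goes down.
    print(f"load failed: {exc}")
```

The point of the sketch is that nothing about the features themselves changed; only the number of rows did, and a loader with a fixed limit and no fallback turns that into a hard failure.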

Customers whose rules relied on the generated scores saw their legitimate traffic blocked, resulting in a flood of false positives.

In contrast, customers not using these scores… were unaffected.

AI Bots Are Not to Blame, But the Concern Remains

Cloudflare has recently intensified its efforts against crawlers used to train generative AI models. Notably, it announced AI Labyrinth, a system designed to trap bots that ignore “no crawl” directives.

However, Prince emphasizes that this outage was unrelated to new AI-based tools. It was a process error, not a failure of experimental technology.

Cloudflare Promises Structural Changes

To prevent such an incident from happening again, the company announced four key measures:

- Hardening the ingestion of configuration files generated by Cloudflare itself, treating them as if they came from external users.

- Enabling more global switches to quickly disable features in case of malfunction.

- Preventing core dumps and other error reports from exhausting system resources.

- Reviewing all failure modes of critical proxy modules.
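The first two measures can be sketched together. The following is a minimal illustration under stated assumptions, not Cloudflare's implementation: the class `BotModule`, its fields, and the toy scoring formula are hypothetical. It validates an internally generated file the way one would validate untrusted input, keeps the last known good configuration when validation fails, and exposes a global switch that disables the module entirely.

```python
from dataclasses import dataclass, field


@dataclass
class BotModule:
    """Hypothetical bot-scoring module with defensive config ingestion."""

    max_features: int = 200                      # assumed limit
    enabled: bool = True                         # global kill switch
    active: list = field(default_factory=list)   # last known good features

    def ingest(self, rows):
        """Validate a new feature file; keep the old one if it is rejected."""
        deduped = list(dict.fromkeys(rows))      # drop duplicates, keep order
        if not deduped or len(deduped) > self.max_features:
            return False                         # reject, self.active untouched
        self.active = deduped
        return True

    def score(self, seen):
        """Toy score: share of active features present in the request."""
        if not self.enabled or not self.active:
            return None                          # caller skips bot rules
        hits = sum(1 for name in self.active if name in seen)
        return hits / len(self.active)


module = BotModule(max_features=3)
module.ingest(["a", "b"])                  # accepted
module.ingest(["a", "b", "c", "d"])        # rejected, previous file stays active
module.ingest(["a", "a", "b", "b"])        # duplicates collapse, accepted

module.enabled = False                     # operator flips the kill switch
print(module.score({"a"}))                 # None: bot rules are bypassed
```

Rejecting a bad file while continuing to serve the previous one trades freshness for availability, and the switch gives operators a way to take the module out of the request path without a deployment.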

A comprehensive technical response, but one that does not address the fundamental issue: the Web is increasingly reliant on centralized infrastructures, and each vulnerability becomes a systemic risk.

An Outage That Raises Broader Questions

This Cloudflare incident joins a troubling series of outages affecting Microsoft, AWS, and other giants. As the Web increasingly relies on a few central players, outages are becoming less frequent but far more impactful.

The modern Internet is not just a distributed network: it is an ecosystem where some nodes have become vital. And when one of them falters, a part of the connected world shakes.