ChatGPT: More Than One Million Users Per Week Report Suicidal Thoughts

ChatGPT, which began as an experimental technology tool, has rapidly become a conversation partner for hundreds of millions of users worldwide.

However, behind this widespread usage lies a troubling reality: for the first time, millions are sharing their emotions, anxieties, and sometimes even their distress with a machine.

Worrying Statistics: More Than One Million Users Affected Each Week

According to new data released on Monday by OpenAI, approximately 0.15% of weekly active ChatGPT users engage in conversations that show explicit signs of suicidal planning or intent.

While this percentage may seem low, with over 800 million weekly active users, it translates to more than one million individuals.
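The arithmetic behind that figure can be checked in a few lines. The sketch below is only an illustration: the function name `users_from_share` is hypothetical, and it assumes exactly 800 million weekly active users, which the article gives as a lower bound ("over 800 million").

```python
from decimal import Decimal


def users_from_share(weekly_users: int, percent: str) -> int:
    """Return how many users `percent` percent of `weekly_users` represents.

    Decimal is used instead of float so that small percentages such as
    0.15 are handled without binary rounding error.
    """
    share = Decimal(percent) / Decimal(100)
    return int(share * weekly_users)


# Assumption: 800 million is the lower bound cited in the article.
WEEKLY_ACTIVE_USERS = 800_000_000

affected = users_from_share(WEEKLY_ACTIVE_USERS, "0.15")
print(f"{affected:,}")  # prints 1,200,000
```

Because 800 million is a floor rather than an exact count, 1.2 million should be read as a minimum estimate.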

The company also notes that a similar share of users show heightened emotional attachment to the chatbot, and that hundreds of thousands show signs of psychosis or mania in their exchanges.

OpenAI Promises Improvements in Handling Psychological Distress

To address these risks, OpenAI claims to have trained its model to better recognize signs of distress, de-escalate sensitive conversations, and direct users to professional resources when necessary.

“We have taught the model to recognize distress, calm exchanges, and guide individuals toward appropriate help,” OpenAI stated in its announcement.

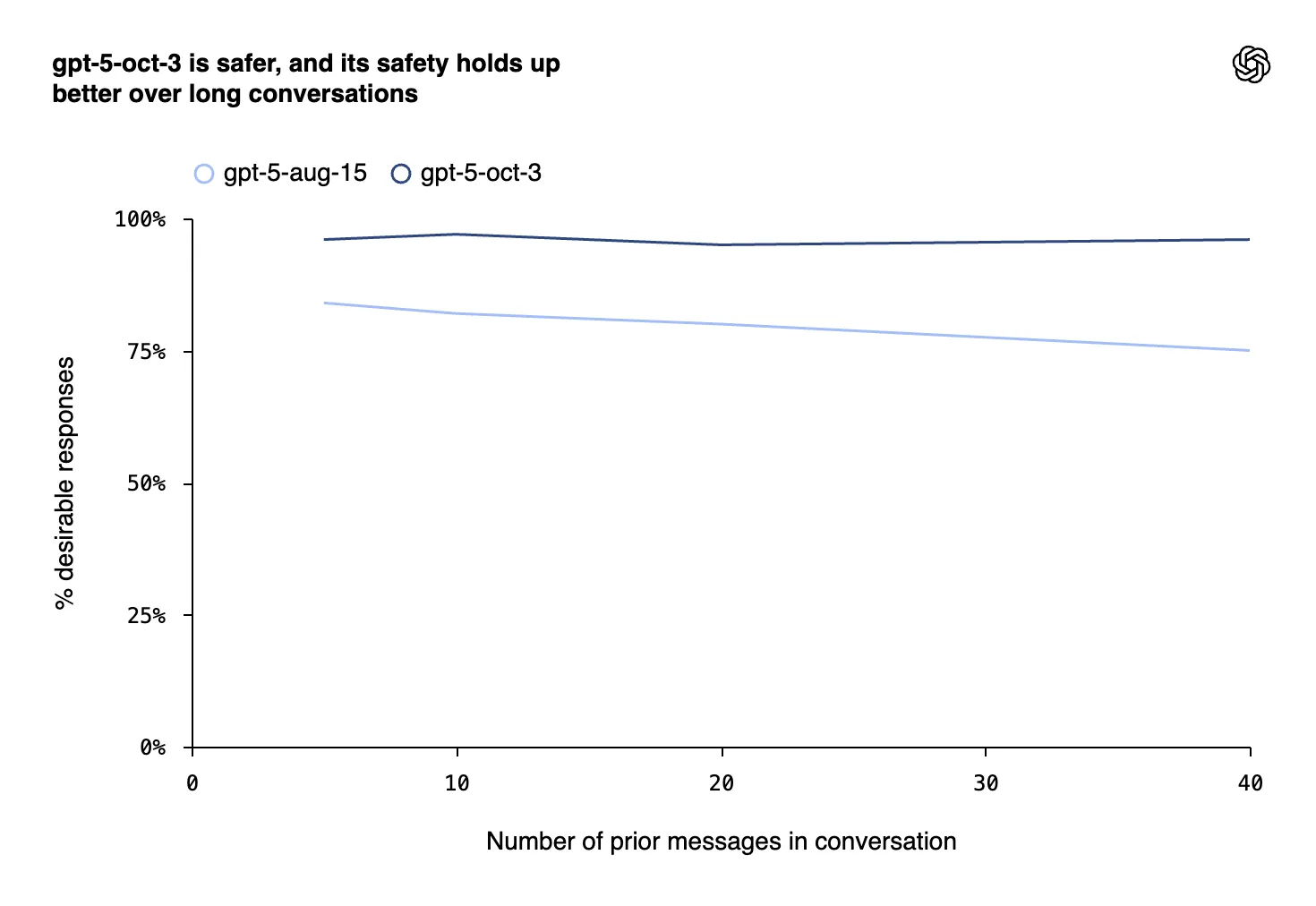

The company asserts that it has collaborated with over 170 mental health experts to design these enhancements, claiming that the latest version of GPT-5 responds more appropriately and consistently than previous iterations.

A Major Ethical and Legal Challenge for OpenAI

These efforts come as OpenAI faces scrutiny and lawsuits. The parents of a 16-year-old who confided suicidal thoughts to ChatGPT before dying by suicide are currently suing the company. In light of this tragedy, 45 U.S. state attorneys general have warned OpenAI about the need to protect young users.

In response, the company has established a “Wellness Council” to oversee mental health issues, although critics point out that it lacks any experts in suicide prevention.

OpenAI also states it is developing an automatic age detection system to identify minors and impose stricter restrictions on them.

Rare Conversations with Significant Consequences

OpenAI describes such conversations as “extremely rare,” but their human impact is profound. According to the company, about 0.07% of weekly active users and 0.01% of messages show signs of psychotic or manic crises, while 0.15% of weekly active users show signs of excessive emotional attachment to the chatbot.

In an evaluation of 1,000 sensitive conversations, the latest version of GPT-5 reportedly responded “appropriately” 92% of the time, compared to just 27% for the previous version from August 2025.
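To put those percentages in absolute terms, the short sketch below converts them into conversation counts. It is an illustration only: the helper `appropriate_count` is a hypothetical name, and it assumes both versions were scored on the same 1,000 conversations, which the article implies but does not state outright.

```python
def appropriate_count(total: int, percent: int) -> int:
    """Return how many of `total` conversations a whole-number percentage represents."""
    return total * percent // 100


TOTAL_CONVERSATIONS = 1_000  # evaluation size reported in the article

latest = appropriate_count(TOTAL_CONVERSATIONS, 92)    # latest GPT-5 version
previous = appropriate_count(TOTAL_CONVERSATIONS, 27)  # previous version

print(latest, previous, latest - previous)  # prints 920 270 650
```

Under that assumption, the newer version handled 650 more of the 1,000 conversations appropriately, a gap of 65 percentage points.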

OpenAI also indicated that its safety testing will now include mental health-specific indicators, such as emotional dependency and non-suicidal mental health crises.

Between Caution and Controversy

Despite these concerns, CEO Sam Altman announced that verified adult users will be able to have erotic conversations with ChatGPT starting in December. This decision has prompted strong reactions, with some viewing it as contradictory to efforts to prevent user distress.

Altman justified this move by explaining that OpenAI had “made ChatGPT very restrictive for mental safety reasons,” but that this caution had also made it less useful or enjoyable for users who pose no particular risk.

ChatGPT has evolved from a mere tool into a mirror of human emotions, at times serving as a confidant for invisible distress. OpenAI is now striving to demonstrate that artificial intelligence can listen without causing harm and, when necessary, hand off to humans.